4 Tips for Managing a High Traffic Website

In this article, we share Core dna’s tips for managing high traffic and delivering flawless performance. These strategies were put to the test during one of the biggest ticket sales events in Australia: Taylor Swift's Eras Tour.

From implementing effective caching strategies to optimizing your backend infrastructure, these best practices will ensure that your site remains responsive and resilient, even during traffic surges.

At Core dna, we use these techniques to ensure our clients always deliver a seamless user experience to their visitors. Without further ado, let's dive in and explore how you can prepare your website for the demands of high traffic.

How Core dna Managed the Ticket Sales for Taylor Swift's Eras Tour in Australia

During one of the biggest concert ticket sales events in Australia, Taylor Swift's Eras Tour, Core dna's implementation of caching strategies and cloud-based scalability for our client Frontier Touring proved invaluable.

The platform handled:

- 15 user registrations per second

- 850 dynamic content requests per second, without caching at certain levels.

- An entire month's worth of traffic in just 2 days.

Despite this massive surge, the infrastructure seamlessly managed the load without any downtime, ensuring a smooth and efficient user experience.

The combination of multi-layered caching, dynamic scaling and the implementation of a "waiting room" allowed Core dna to serve thousands of simultaneous requests, maintaining fast load times and reliability. As a result, the event was a resounding success, with 570,000 tickets sold and the website performance remaining robust throughout the peak traffic period.

It was a record-breaking event, and we are extremely proud of how the platform performed under such critical conditions. We are also grateful to our client Frontier Touring for their trust in Core dna.

Use Caching to Store Data

Caching is a crucial measure for managing high traffic on websites. The more static content that can be served from cache, the lower the load on the backend infrastructure and servers.

For effective caching, it should be implemented at different levels of the request processing lifecycle, including at the CDN, web server, and application/database levels. This multi-layered caching approach ensures that if one layer of caching is not available, the next layer can serve the content.

By implementing an effective caching strategy, you reduce the number of requests the backend servers must process and keep them from becoming overwhelmed during periods of high traffic. More requests are served from the cache instead of generating new content on the fly.
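To make the layered fallback concrete, here is a minimal Python sketch. The `CacheLayer` class and `fetch` function are hypothetical stand-ins. Real CDNs and web server caches are configured rather than coded like this. What the sketch shows is the lookup order: each layer is tried in turn, an unavailable layer behaves like a miss, and the origin only renders the page when every layer misses.

```python
from __future__ import annotations

from typing import Callable, Optional


class CacheLayer:
    """Hypothetical in-memory cache standing in for a CDN, web server, or app cache."""

    def __init__(self, name: str, available: bool = True):
        self.name = name
        self.available = available
        self.store: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if not self.available:
            return None  # an unavailable layer behaves like a cache miss
        return self.store.get(key)

    def set(self, key: str, value: str) -> None:
        if self.available:
            self.store[key] = value


def fetch(key: str, layers: list[CacheLayer], origin: Callable[[str], str]) -> tuple[str, str]:
    """Try each layer in order; only call the origin if every layer misses."""
    for i, layer in enumerate(layers):
        value = layer.get(key)
        if value is not None:
            # Backfill the layers in front of this one so later requests stop earlier
            for front in layers[:i]:
                front.set(key, value)
            return value, layer.name
    value = origin(key)  # every layer missed: render the content on the backend
    for layer in layers:
        layer.set(key, value)
    return value, "origin"
```

If the CDN layer becomes unavailable after a page has been cached, the next request for that page is answered by the web server layer instead of reaching the backend.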

A - Use CDN

Using a content delivery network (CDN) is a must for high traffic websites. A CDN is a distributed group of servers that caches content in locations all around the world.

When a user requests a page, the content is served from the CDN node closest to them, reducing response times. This distributed caching setup also protects against the failure of individual CDN nodes. If the nearest server to the user is down, the next closest node can take over, ensuring there is no single point of failure and that your site remains operational.

Traditionally, CDNs were used to cache only static assets like images, CSS, and JavaScript files, while the actual web pages were still served by the backend servers. However, for high traffic websites, the CDN should sit in front of the entire application stack, serving both the static and dynamic content.

CDNs also provide SSL offloading, handling the encryption protocol negotiation at the CDN node rather than the backend servers. This further reduces the load on the backend infrastructure.

The caching expiry times for different assets should be carefully considered, with longer expiry times (e.g. 6 months to a year) for static assets that don't change often, and shorter expiry times for more dynamic content.
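Expiry times are usually set through the HTTP `Cache-Control` header. `max-age` applies to browsers, and `s-maxage` overrides it for shared caches such as a CDN. The sketch below picks a header for each resource type. The TTL values and the `/cart` and `/account` paths are illustrative assumptions, not recommendations for any particular site.

```python
import os

# Illustrative lifetimes in seconds; tune these for your own site
ONE_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s
FIVE_MINUTES = 5 * 60

STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2"}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value based on the kind of resource."""
    ext = os.path.splitext(path)[1].lower()
    if ext in STATIC_EXTENSIONS:
        # Static assets rarely change: browsers and the CDN may keep them for a year
        return f"public, max-age={ONE_YEAR}"
    if path.startswith("/account") or path.startswith("/cart"):
        # Personalised pages must never be stored in a shared cache
        return "private, no-store"
    # Shareable dynamic HTML: browsers revalidate, the CDN keeps it for 5 minutes
    return f"public, max-age=0, s-maxage={FIVE_MINUTES}"
```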

Static files and resources should also be cached in the user's browser or on their local device. When a user visits a website, the browser downloads and saves files like images, CSS, JavaScript, and HTML documents in its cache.

Upon revisiting the website, the browser can load these files from the local cache instead of downloading them again from the server, which significantly reduces load times and server requests.

B - Use server-side caching

When dealing with high traffic websites, it's important to carefully consider which content is dynamic versus static. Ideally, as much static content as possible should be cached.

For pages with dynamic elements, such as a shopping cart or login section, the dynamic parts can be separated from the static parts. The static parts can be cached, while the dynamic parts are loaded separately using JavaScript.

This separation of dynamic and static content can save thousands of requests to the web servers, as the static parts don't need to be regenerated for each request.
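Here is a minimal Python sketch of the split. It assumes a hypothetical `/api/cart-summary` endpoint. The product page HTML contains no user-specific data, so one rendered copy can be cached. Here `functools.lru_cache` stands in for a page cache. A small JavaScript call then fills in the cart from a separate, uncached JSON response.

```python
import json
from functools import lru_cache


@lru_cache(maxsize=1024)
def render_product_page(product_id: int) -> str:
    """Shared HTML shell: identical for every visitor, so one copy can be cached."""
    # The cart widget is an empty placeholder; the script below fills it per user
    return (
        f"<h1>Product {product_id}</h1>"
        '<div id="cart-summary"></div>'
        "<script>fetch('/api/cart-summary').then(r => r.json())"
        ".then(d => { document.getElementById('cart-summary').textContent = d.items + ' items'; });"
        "</script>"
    )


def cart_summary(session: dict) -> str:
    """Per-user JSON for the hypothetical /api/cart-summary endpoint: small and uncached."""
    return json.dumps({"items": len(session.get("cart", []))})
```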

While using headless technologies and client-side rendering can appear efficient, traditional server-side rendering can still achieve good performance for high traffic websites by applying the principles of separating dynamic and static content.

It's important not to rely solely on CDN caching, but to also cache pages on the application servers. This ensures that if a page is not available in the CDN cache, it can still be served from the application server cache.

C - Cache objects and database queries

In addition to caching at the CDN and server levels, caching should also be implemented within the application itself, for objects and database queries.

For example, when displaying a product listing page, the stock levels for the products can be cached in a solution like Memcached, rather than querying the database for this information every time the page is loaded. This can save many non-critical database queries.
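This is the cache-aside pattern. The application checks the cache first. Only on a miss does it query the database and store the result with an expiry. The sketch below uses a small in-process `TTLCache` as a stand-in for Memcached. A real client such as pymemcache offers comparable get and set calls with an expiry. `query_db` is a hypothetical function that runs the stock query.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """In-process stand-in for Memcached: each value expires after `ttl` seconds."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._data: dict = {}

    def get(self, key: str) -> Optional[int]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired entries count as a miss
            return None
        return value

    def set(self, key: str, value: int, ttl: float) -> None:
        self._data[key] = (value, self._clock() + ttl)


def get_stock_level(product_id: int, cache: TTLCache,
                    query_db: Callable[[int], int], ttl: float = 60) -> int:
    """Cache-aside read: use the cached stock level, query the database only on a miss."""
    key = f"stock:{product_id}"
    cached = cache.get(key)
    if cached is not None:  # `is not None` so a stock level of 0 still counts as a hit
        return cached
    level = query_db(product_id)
    cache.set(key, level, ttl)
    return level
```

A 60-second expiry means a displayed stock level can be up to a minute old. That trade-off is acceptable for a listing page, but not for the final check at checkout.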

Similarly, other parts of the application's content that don't need to be the most up-to-date can be cached within the application to reduce load on the database.

This application-level caching can be an important layer of caching to supplement the CDN and server-side caching, especially for dynamic content that can't be easily cached at the higher levels.

Database caching offloads query processing from your database to a cache that can live inside the database, in the application, or in a standalone layer. These caches hold data temporarily in memory, which makes access much faster than reading it from disk, even from an SSD.

Spread Traffic Across Different Servers

Load Balancing

One essential technique for managing high traffic on websites is load balancing. Load balancers distribute incoming network traffic across multiple servers, ensuring that no single server becomes overwhelmed.

They act as intermediaries between users and the server farm, directing traffic based on algorithms such as round-robin, least connections, or IP hash. This distribution of load helps maintain a smooth and consistent user experience by preventing any single server from becoming a bottleneck.
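The three algorithms named above can be sketched in a few lines of Python. This toy `LoadBalancer` class is illustrative only. Production balancers also track health checks and server weights. Here `active` stands in for the open-connection counts a real balancer would maintain.

```python
from __future__ import annotations

import hashlib
import itertools


class LoadBalancer:
    """Toy balancer showing three common server-selection strategies."""

    def __init__(self, servers: list[str]):
        self.servers = servers
        self._rotation = itertools.cycle(servers)
        self.active = {s: 0 for s in servers}  # open connections per server

    def round_robin(self) -> str:
        # Hand each new request to the next server in a fixed rotation
        return next(self._rotation)

    def least_connections(self) -> str:
        # Pick the server currently handling the fewest open connections
        return min(self.servers, key=lambda s: self.active[s])

    def ip_hash(self, client_ip: str) -> str:
        # Hash the client IP so the same visitor keeps landing on the same server
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]
```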

Cloud Environments and Elastic Load Balancers

In cloud environments, elastic load balancers work alongside auto scaling. They distribute traffic across a pool of servers that grows and shrinks in response to real-time traffic patterns. This flexibility allows for seamless scaling and ensures that resources are allocated efficiently.

Cloud platforms like AWS, Google Cloud, and Azure facilitate this process, offering scalable infrastructure on-demand. During peak traffic periods, additional servers can be quickly deployed to manage the increased load. Conversely, once traffic decreases, servers can be scaled down, optimizing costs by only utilizing necessary resources.

Auto Scaling and Monitoring

In a cloud environment, a smart load balancer should be positioned in front of the application servers. This load balancer efficiently distributes incoming requests across the server fleet, ensuring balanced resource utilization.

Monitoring resource usage, such as CPU and memory, is crucial for determining when scaling is required. Cloud platforms offer auto scaling capabilities that automatically add or remove servers based on pre-defined metrics, such as CPU usage, memory consumption, or average response times.

This automation minimizes the need for manual intervention during traffic spikes, enhancing reliability and performance.
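As a rough illustration of a metric-based rule, the function below resizes the fleet so that average CPU utilization lands near a target. It is a simplified, hypothetical version of the target-tracking idea, not the exact algorithm any cloud provider uses. The 60% target and the bounds of 2 to 20 instances are assumptions.

```python
import math


def desired_instances(current: int, avg_cpu: float, target_cpu: float = 60.0,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Size the fleet so average CPU utilization lands near `target_cpu` (percent)."""
    # Total work is roughly current * avg_cpu; spread it so each server sits near target_cpu
    needed = math.ceil(current * avg_cpu / target_cpu)
    # Never scale below a safe floor or above the budgeted ceiling
    return max(min_instances, min(max_instances, needed))
```

For example, `desired_instances(4, 90)` returns 6. Four servers at 90% CPU carry about 360% worth of work, and six servers can share that at roughly 60% each.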

Advantages of Multiple Smaller Instances

It is advisable to use a larger number of smaller instances rather than a few large instances.

This approach reduces the impact of a single server failure. For example, if you have four large application servers and one fails, you lose 25% of your capacity. With 20 smaller servers, the failure of one server costs only 5% of overall capacity.
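Assuming identical servers that share the load evenly, the arithmetic behind this example with N servers is:

```latex
\begin{aligned}
\text{capacity lost} &= \frac{1}{N}: && \tfrac{1}{4} = 25\%, \quad \tfrac{1}{20} = 5\% \\
\text{load on each surviving server} &= \frac{N}{N-1} \times \text{original}: && \tfrac{4}{3} \approx 1.33, \quad \tfrac{20}{19} \approx 1.05
\end{aligned}
```

The second line shows the extra load each surviving server must absorb. That is roughly 33% more with four servers, but only about 5% more with twenty.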

This strategy effectively mitigates risks and ensures better resilience.

Improve your Code and Database

Optimize Your Code

Optimizing database performance and efficiently managing workloads are crucial for maintaining a smooth user experience, especially under high traffic conditions.

This section covers key strategies for achieving these goals, including efficient query design, regular database maintenance, appropriate indexing, separating read and write operations, using replication for load distribution, avoiding problematic UPDATE queries, and moving non-critical workloads to the background.

1 - Use efficient queries

Poorly written queries can slow down your website by taking longer to execute and consuming excessive resources. To speed them up, you can simplify complex queries, use appropriate JOINs, and avoid unnecessary columns in SELECT statements.

Use prepared statements to prevent SQL injection attacks and to let the database cache and reuse query execution plans. Index the columns your queries filter and join on, and use EXPLAIN statements to analyze query performance and identify bottlenecks.
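Here is what a parameterized (prepared) statement looks like with Python's built-in `sqlite3` module. It is used purely as a self-contained example, and the `orders` table is hypothetical. The `?` placeholder keeps user input out of the SQL text. `sqlite3` also keeps recently compiled statements in a per-connection cache, so repeated queries skip re-parsing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(1, 20.0), (2, 35.5), (1, 12.0)],
)


def orders_for_customer(customer_id):
    # The value is bound to the ? placeholder, never pasted into the SQL string,
    # so input like "1 OR 1=1" is compared as data and cannot alter the query
    return conn.execute(
        "SELECT id, total FROM orders WHERE customer_id = ? ORDER BY id",
        (customer_id,),
    ).fetchall()
```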

2 - Regularly clean and maintain the database

Over time, databases can become cluttered with outdated or irrelevant data. You should run regular maintenance to prevent degradation, including removing unused tables, archiving old data, and regularly updating statistics.

3 - Consider indexing and partitioning

Proper indexing helps the database engine find and retrieve data more quickly. Create indexes on columns that are frequently used in WHERE clauses, JOINs, and ORDER BY statements but avoid over-indexing, which can actually slow down write operations.
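You can watch an index change the query plan with SQLite's `EXPLAIN QUERY PLAN`. Python's `sqlite3` serves here as a self-contained stand-in for your production database. The `products` table and the index name are hypothetical. The exact wording of the plan text varies between SQLite versions.

```python
from __future__ import annotations

import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, sku TEXT, category TEXT)")


def query_plan(sql: str) -> list[str]:
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable detail
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


query = "SELECT id FROM products WHERE category = 'shoes'"
before = query_plan(query)  # a full table scan, e.g. ['SCAN products']
conn.execute("CREATE INDEX idx_products_category ON products (category)")
after = query_plan(query)   # now a search using idx_products_category
```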

Database partitioning involves splitting a large database into smaller, more manageable pieces. These partitions can then be stored, accessed, and managed separately. When they are spread across different servers, this can also improve availability, since the whole dataset no longer depends on a single point of failure.

4 - Separate read from write

When building high traffic web applications, it is advisable to have separate database connections for reads versus writes. In most cases, web applications have substantially more read queries than write queries.

Executing all reads and writes on the same database server can lead to issues like database locks and inefficient serving of data, because a single server can only handle a limited number of simultaneous connections. Separating reads from writes makes it easier to scale the read-heavy workload.

For the read queries, the application should use a read-only replica of the database. This allows the read queries to be served efficiently without contending for resources with the write queries on the master database.
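Here is a minimal routing sketch in Python. It assumes `primary` and `replicas` are connection objects with an `execute(sql, params)` method, such as DB-API connections. Classifying queries by their first keyword is a deliberate simplification. Many frameworks instead let you choose the connection explicitly.

```python
import itertools


class DatabaseRouter:
    """Route writes to the primary database and spread reads across replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def connection_for(self, sql: str):
        # Simplification: treat any statement starting with SELECT as a read
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)  # round-robin across read-only replicas
        return self.primary  # INSERT/UPDATE/DELETE, or no replicas configured

    def execute(self, sql: str, params=()):
        return self.connection_for(sql).execute(sql, params)
```

Replicas usually lag slightly behind the primary. A read that must see a write the same user just made, such as an order confirmation, may therefore need to go to the primary.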

5 - Avoid UPDATE Queries

When working with high traffic websites, one potential pitfall is the use of UPDATE queries on the same database row across many simultaneous requests.

For example, if the application has a counter field in a database table that is updated with each page view, a large number of simultaneous requests trying to update that same row can lead to issues. These simultaneous UPDATE queries can cause deadlocks in the database or result in the queries queuing up.

This can negatively impact the user experience, as requests have to be executed sequentially rather than in parallel.

Instead of using a single counter field that is updated, it is better to use a separate table where a new row is inserted for each page view. The total count can then be calculated periodically in the background. This approach avoids the locking issues that arise from multiple concurrent UPDATE queries on the same database row during high traffic periods.
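Here is a sketch of the insert-then-aggregate pattern, using Python's `sqlite3` so it runs anywhere. SQLite locks the whole database on writes, so this only illustrates the schema and queries. The row-lock contention the pattern avoids applies to server databases such as MySQL or PostgreSQL. The table names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE page_views (id INTEGER PRIMARY KEY, page_id INTEGER NOT NULL);
    CREATE TABLE page_view_totals (page_id INTEGER PRIMARY KEY, total INTEGER NOT NULL);
""")


def record_view(page_id: int) -> None:
    # Hot path: every request appends its own row instead of updating a shared counter
    with conn:
        conn.execute("INSERT INTO page_views (page_id) VALUES (?)", (page_id,))


def aggregate_views() -> None:
    # Background job: rebuild the totals from the raw rows in one transaction
    with conn:
        conn.execute("DELETE FROM page_view_totals")
        conn.execute(
            "INSERT INTO page_view_totals (page_id, total) "
            "SELECT page_id, COUNT(*) FROM page_views GROUP BY page_id"
        )
```

Recomputing totals from every raw row keeps the sketch short. A production job would more likely aggregate only new rows and archive old ones.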

6 - Move non-critical workloads to the background

When designing your infrastructure, it's important to consider whether certain business logic needs to be executed with every request to the web server or if it can be offloaded to background processing. This approach is especially useful in high-traffic scenarios where efficiency and user experience are paramount.

For instance, in an e-commerce platform, placing an order often involves multiple third-party integrations, such as communicating with accounting systems, CRM, or third-party logistics providers. While it is crucial to instantly notify the customer that their order has been placed, the actual communication with these third-party systems can occur in the background. This way, the customer receives immediate feedback without waiting for all background processes to complete.

To implement background processing, various queue services can be utilized. For example, AWS offers the Simple Queue Service (SQS), which allows you to enqueue tasks with metadata describing the workload. A separate queue worker, running on a different server, periodically checks the queue for new jobs and processes them as they arrive.
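The producer/worker split can be sketched with Python's standard `queue` and `threading` modules. Here they stand in for SQS and a worker on a separate server. The task names are hypothetical, and the worker only records each job instead of calling real third-party APIs.

```python
import queue
import threading

jobs = queue.Queue()
processed = []  # stands in for calls to accounting, CRM, and logistics systems


def place_order(order_id: int) -> str:
    """Web request: enqueue the slow integrations and answer the customer right away."""
    for task in ("accounting", "crm", "logistics"):
        jobs.put({"order_id": order_id, "task": task})  # metadata describing the work
    return f"Order {order_id} placed"


def worker() -> None:
    """Queue worker: runs outside the request path, processing jobs as they arrive."""
    while True:
        job = jobs.get()  # blocks until a job is available
        if job is None:  # sentinel value used to shut the worker down
            jobs.task_done()
            break
        processed.append((job["order_id"], job["task"]))  # a real worker calls the API here
        jobs.task_done()


worker_thread = threading.Thread(target=worker, daemon=True)
worker_thread.start()
```

With SQS, the web server would call boto3's `send_message` to enqueue work. The worker would loop over `receive_message`, calling `delete_message` once each job is done.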

This separation ensures that mission-critical web servers are not bogged down by non-critical tasks, thereby enhancing user experience and maintaining application performance.

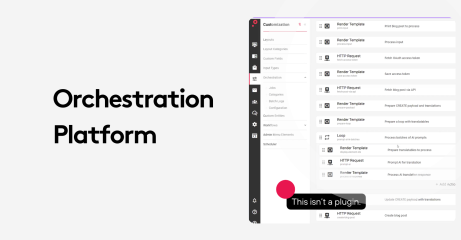

Use microservices

A microservices architecture further enhances this approach by breaking an application down into smaller, independently deployable services. Each microservice handles a specific part of the application's functionality, which allows for better scalability and manageability.

For example, a product details page in an online store may need to display dynamic data such as product availability across multiple warehouses and related product recommendations. Instead of processing all this information in a single, potentially slow request, it’s more efficient to split the page into smaller components.

Each component can be loaded via separate web requests using JavaScript, allowing the page to load critical information first and fetch additional data asynchronously.
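In the browser this is done with JavaScript `fetch` calls. To keep every example in one language, the Python sketch below uses `asyncio` to show the same loading order. `fetch_component` is a hypothetical stand-in for an HTTP request, and `asyncio.sleep` simulates network latency. The critical component is awaited first. The secondary components are then requested concurrently rather than one after another.

```python
from __future__ import annotations

import asyncio


async def fetch_component(name: str, latency: float) -> tuple[str, str]:
    # Hypothetical stand-in for an HTTP request to a per-component endpoint
    await asyncio.sleep(latency)
    return name, f"<section>{name}</section>"


async def load_product_page() -> dict[str, str]:
    # 1. Critical content first, so it can be displayed as soon as it arrives
    name, html = await fetch_component("product-details", 0.01)
    page = {name: html}
    # 2. Secondary components are requested concurrently, not one after another
    extras = await asyncio.gather(
        fetch_component("warehouse-availability", 0.05),
        fetch_component("recommendations", 0.05),
    )
    page.update(extras)  # gather returns results in the order the calls were passed
    return page


page = asyncio.run(load_product_page())
```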

This method of handling multiple, smaller requests, rather than a single large one, is particularly beneficial for high-traffic websites. It reduces the load on any single server and prevents bottlenecks, ensuring a smoother and faster user experience.

For instance, on a homepage with cached static content and several dynamic sections, loading these sections via separate requests helps maintain overall page performance without overloading the servers.

Protect your website

When dealing with high traffic loads, it's important to consider not just the legitimate traffic, but also potential malicious traffic. Properly filtering DDoS attacks and bots attempting to access your website will protect your infrastructure.

Here are a few tips on what to implement to protect your infrastructure.

Use a web application firewall

Use a web application firewall (WAF) to protect the infrastructure from malicious traffic and attacks. WAFs filter and monitor HTTP traffic to block malicious requests before they reach your website. They provide fine-grained controls and validation techniques to guard against DDoS attacks and automated bots, as well as common threats like SQL injection and cross-site scripting.

Use distributed denial of service (DDoS) protection services

DDoS attacks flood your website with traffic, overwhelming servers and causing outages. Firewalls and Access Control Lists (ACLs) can help control what traffic reaches your applications by recognizing and blocking illegitimate requests. CDNs and load balancers also keep parts of your infrastructure, like your database servers, from being directly exposed, which reduces the attack surface.

Consider adding a waiting room

During periods of extremely high traffic, we suggest implementing a "waiting room" feature to handle traffic spikes. The waiting room temporarily holds users in a queue before allowing them to access the application. This prevents the application from being overloaded and crashing, maintaining availability even during the highest traffic periods. A waiting room typically combines techniques like load balancing, rate limiting, and queueing to manage the influx of users and distribute the load across the infrastructure.
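Here is a minimal sketch of the queueing core of a waiting room. It assumes a fixed capacity of concurrently active users. The `WaitingRoom` class is hypothetical. Real implementations also issue access tokens, expire idle sessions, and run in front of the application rather than inside it.

```python
from __future__ import annotations

from collections import deque


class WaitingRoom:
    """Admit at most `capacity` active users; everyone else waits in FIFO order."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.active: set[str] = set()
        self.queue: deque[str] = deque()

    def arrive(self, user_id: str) -> str:
        if user_id in self.active:
            return "admitted"
        # Admit directly only if there is room and nobody is already waiting
        if len(self.active) < self.capacity and not self.queue:
            self.active.add(user_id)
            return "admitted"
        if user_id not in self.queue:
            self.queue.append(user_id)
        return f"waiting (position {self.queue.index(user_id) + 1})"

    def leave(self, user_id: str) -> None:
        self.active.discard(user_id)
        # Freed slots go to whoever has waited longest
        while self.queue and len(self.active) < self.capacity:
            self.active.add(self.queue.popleft())
```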

Conclusion

Successfully managing a high-traffic website is an exciting opportunity. Having an influx of visitors to your website can boost your business, increase revenue, and elevate your brand visibility, all of which are good things.

But doing it sustainably requires a comprehensive strategy. If you don’t focus on maintaining performance, security, and user experience, you can alienate customers in mere seconds.

Make the right server and hosting choice, implement effective caching strategies, optimize your code and content, enhance database performance, and do not overlook the importance of having robust security measures. A fast, secure, and reliable website is key to retaining visitors and turning high traffic into lasting success.